In the ever-evolving landscape of the modern workplace, AI stands as both a beacon of potential and a subject of debate. As organizations increasingly integrate AI into their operations, the implications of this technology are profound, reshaping the very fabric of how we work. While AI’s promise to enhance efficiency and innovation is becoming clearer, it brings with it a host of challenges that warrant closer examination.

From the way AI is transforming job roles to its impact on data privacy, creativity, and employee skillsets, there’s a need to balance its benefits with the potential drawbacks. The issue of job displacement looms large as automation becomes more prevalent, particularly in sectors like manufacturing and customer service. Meanwhile, the dependence on technology raises concerns about the erosion of essential human skills, especially when technology does more than just assist a human worker.

Moreover, while AI is revolutionizing many aspects of work, its limitations in replicating human creativity cannot be overlooked. AI’s ability to generate ideas is constrained by its programming and the data it has been trained on. It lacks the nuanced understanding and emotional depth that are the hallmarks of true human creativity.

In this article, we delve into these aspects of AI in the workplace, exploring the delicate interplay between embracing cutting-edge technology and preserving the indispensable human elements of our work culture. As we stand at the crossroads of a technological revolution, understanding these dynamics is crucial for navigating the future of work.

1. Dependence on Technology

Over-reliance on technology can leave employees less capable of doing their jobs without it. For example, if you rely on AI apps to write your sales emails, you may eventually find that you struggle to write one without AI’s help.

To mitigate this issue, it’s important to adopt a more integrated approach where AI is used as a tool for enhancement rather than replacement. For example, instead of completely depending on AI to write a sales email, employees could draft the initial version themselves and then use AI for suggestions on improvements or edits. This approach ensures that the primary task and the critical thinking involved remain in the hands of the employee, with AI serving as a support system to enhance the quality of the output.

Moreover, treating AI as a collaborator, much as you would seek feedback from a colleague, not only maintains skill levels but also deepens your understanding of the task at hand. Employees who edit and refine their own work in response to AI suggestions are more likely to retain, and even strengthen, their skills. This approach also encourages more thoughtful use of AI: employees learn to assess AI-generated content critically, recognizing that while AI can offer valuable insights, it may miss the nuances and context-specific details that a person would catch.

2. Data Privacy Concerns

While most of the companies creating AI technology are taking care to maintain data privacy and security, no system is 100% secure. The data you feed to an AI app may eventually become exposed via a data breach.

To safeguard against these risks, there are several proactive measures that can be taken:

- Choose Reputable AI Providers: It’s essential to select AI systems from reputable and trustworthy companies. This involves conducting thorough research to understand their privacy policies, security measures, and track record in handling data. Companies should look for vendors that adhere to international data protection regulations, employ robust encryption methods, and have a transparent approach to data usage.

- Be Cautious with Data Sharing: Exercise caution regarding the type of data shared with AI systems. Sensitive information, whether personal or corporate, should be shared sparingly and only when absolutely necessary. Companies should establish clear guidelines on what type of data can be fed into AI systems, especially when dealing with customer information or intellectual property.

- Regular Audits and Updates: Regularly auditing the AI tools in use for any potential vulnerabilities and ensuring that all software is up to date with the latest security patches can significantly reduce the risk of a breach.

- Employee Training and Awareness: Employees should be trained in best practices for data privacy, including understanding the types of data that are at higher risk and the importance of adhering to company policies when using AI tools.

- Data Anonymization Techniques: When possible, anonymize data (for example, by removing or masking names, email addresses, and account numbers) before feeding it into AI systems. This reduces the risk of sensitive information being compromised.

- Limit AI Access to Data: Implementing strict access controls and permissions within AI systems can limit their access to only the data necessary for the specific tasks they are designed to perform.

- Regularly Review and Update Privacy Policies: Companies should continually review and update their data privacy policies to reflect the evolving nature of AI technologies and the changing regulatory landscape.

- Incident Response Plan: Having a robust incident response plan in place in case of a data breach is crucial. This plan should include steps to mitigate the damage, inform affected parties, and prevent future occurrences.

Depending on your exact role within a company, not all of these responsibilities may fall to you. But knowing which policies your company has in place, and when they might be triggered, is vital to using AI responsibly.
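To make the anonymization and access-limiting measures above more concrete, here is a minimal Python sketch of a pre-processing step that could run before any data reaches an AI tool. It assumes records are plain dictionaries; the field names, `ALLOWED_FIELDS`, `redact`, and `prepare_for_ai` are all hypothetical, and the regular expressions only catch email addresses and phone-number-like strings. Real anonymization usually also has to handle names, addresses, and account numbers, which simple patterns like these will miss.

```python
import re

# Hypothetical allowlist: only these fields are ever passed to the AI tool
# (the "limit AI access to data" measure).
ALLOWED_FIELDS = {"ticket_id", "product", "issue_description"}

# Simple patterns for common contact details; these are illustrative only
# and will not catch every format or every kind of personal data.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-number-like strings with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare_for_ai(record: dict) -> dict:
    """Keep only allowlisted fields, then redact any string values."""
    return {
        key: redact(value) if isinstance(value, str) else value
        for key, value in record.items()
        if key in ALLOWED_FIELDS
    }


if __name__ == "__main__":
    # Hypothetical customer-support record.
    customer_record = {
        "ticket_id": 1042,
        "customer_name": "Jane Doe",
        "email": "jane.doe@example.com",
        "product": "Router X200",
        "issue_description": "Call me at +1 (555) 123-4567 or email jane.doe@example.com.",
    }
    print(prepare_for_ai(customer_record))
```

The allowlist drops fields such as `customer_name` and `email` entirely, while the regex pass masks contact details that slip into free text, so the printed result contains only `ticket_id`, `product`, and a redacted `issue_description`. Allowlisting is used here rather than a blocklist because a new, unexpected field is then excluded by default.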

3. Limitations on True Creativity

AI, as it currently stands, cannot truly compete with human creativity. While AI systems can generate ideas and concepts by analyzing and synthesizing vast datasets, their creative output is ultimately constrained by the extent and nature of the data they have been trained on. Human creativity often involves making leaps of intuition, drawing on deeply personal experiences, and synthesizing disparate ideas in novel ways—aspects that are currently beyond the reach of AI.

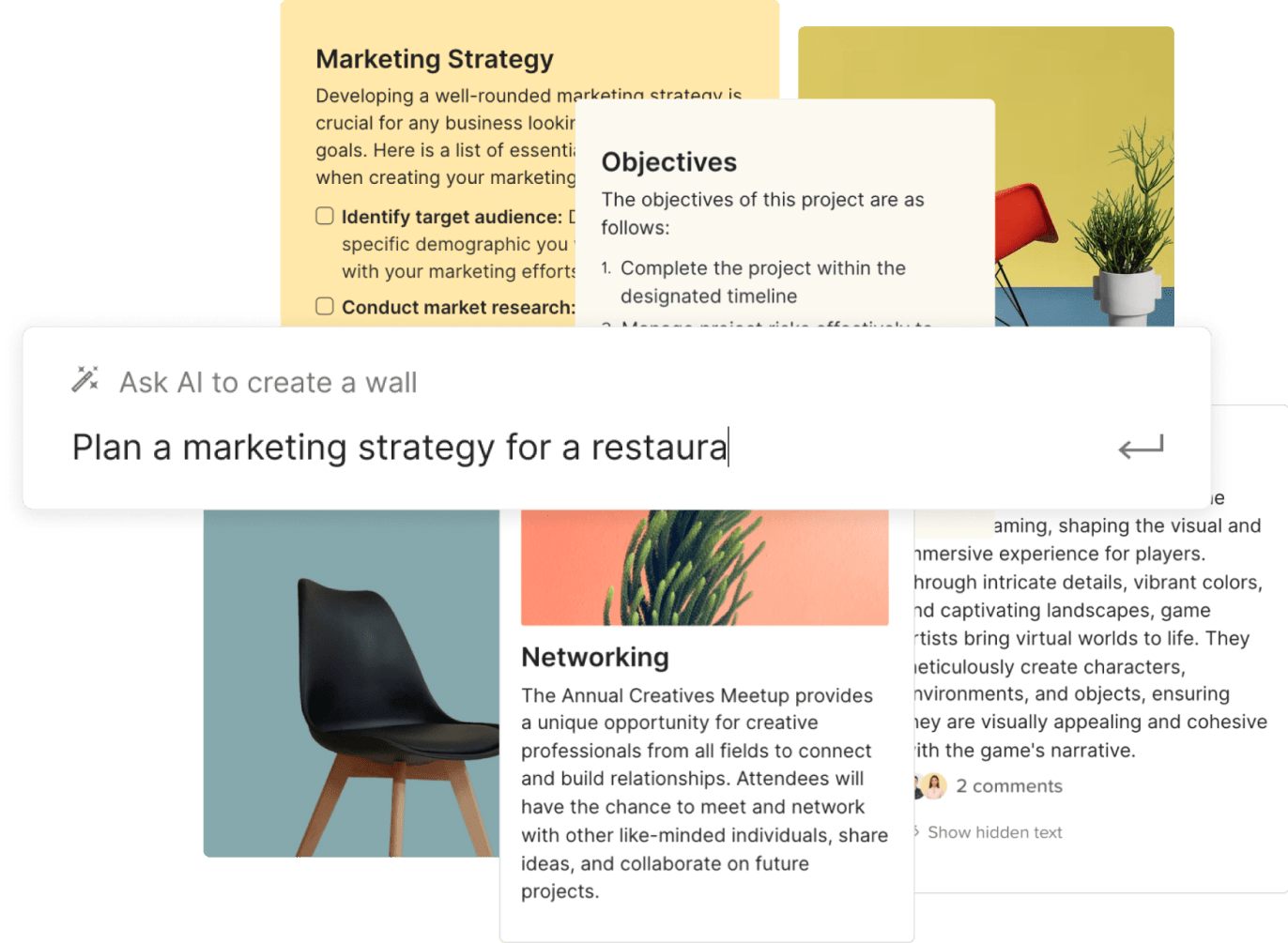

That said, AI can be an excellent brainstorming tool. You can ask it to expand on ideas you’ve already come up with, or to generate ideas based on specific criteria that you can then run with. Here are a few ways you can use AI to generate or test new ideas:

- Idea Expansion: You can feed initial concepts or partial ideas into an AI, which can then generate a range of expansions on these themes. This process can uncover angles or perspectives you might not have considered.

Example Prompt:

I have an idea for [idea]. [Explain the basic idea.] What are some ways that I could expand on that idea or make it more useful?

- Generating Options Based on Criteria: AI can produce ideas or solutions based on specific parameters or criteria you set. This can be particularly useful in fields like design, where you might ask AI to generate concepts that meet certain aesthetic or functional requirements.

Example Prompt:

I’m creating a flyer for an open mic night at a local bar and need a photo-realistic background image of a musician on stage playing the guitar.

- Cross-Pollination of Ideas: AI can draw from a diverse range of sources and disciplines to suggest ideas that might take time to develop through conventional brainstorming. For instance, it could link concepts from art and science to inspire new approaches in either field.

- Overcoming Creative Blocks: When you’re stuck in a creative rut, AI can offer unexpected suggestions that break your usual patterns of thinking. In these cases, I like to use “what if” prompts to get past the block: I ask AI to describe what might happen if certain things changed or occurred.

- Rapid Prototyping: AI can quickly generate prototypes of ideas, allowing for rapid exploration of new concepts without the need for extensive resources.

- Trend Analysis: AI’s ability to analyze current trends can inform your creative process, helping you to create content that is relevant and timely.

Example Prompt:

What are some current trends around UX testing that are likely to become mainstays of the industry?

It’s crucial to approach AI-generated ideas with a critical eye. AI lacks an understanding of cultural nuances, emotional depth, and ethical considerations that are often vital in creative work. The human touch is necessary not only to refine and improve upon these ideas but also to ensure they are appropriate, sensitive, and resonate on a human level.

4. Bias and Inaccuracies

Study after study has shown that large language models reflect and perpetuate biases found in human society. These biases show up regularly in the answers that AI apps like ChatGPT produce. Because of this, it’s vital that you carefully consider the answers AI gives about groups of people, particularly marginalized groups.

While these biases show up in the written content AI produces, you’ll want to be especially careful with AI-generated images. Racial and ethnic stereotypes are the most common issues you’ll encounter when creating images with AI. Being aware of these biases can help you spot them and keep them out of the images you use.

Beyond biases, AI is also not immune to giving inaccurate information, so fact-checking is vital for any content it produces. One of the simplest ways to reduce inaccuracies starts with your prompt: telling AI which sources to draw data from, and explicitly telling it not to make up answers (as strange as that sounds), can go a long way toward preventing factual errors.

5. Ethical Concerns

There are a lot of ethical concerns surrounding AI, from the data it’s trained on (often original works of art) to whether it’s necessary to disclose when a piece of work was generated using AI.

Some of the biggest concerns are around the artworks that were used to train most of the visual generative AI platforms. The original creators of those works of art were not compensated for the use of their art to train these platforms, which has brought up questions of whether copyright violations occurred. While there has been no definitive decision on that, it has created a large rift within and around the art community. Some artists are fine with their art being used to train AI while many others are not. Some also question whether AI art will make it difficult for human artists to earn a living with their art.

One other thing to consider when using AI is copyright: at least in the United States, works generated entirely by AI currently cannot be copyrighted. That means if you publish an article, a book, a piece of art, or anything else that was primarily generated with AI, others may be free to reuse it without legal repercussions. The solution, of course, is to make sure that AI-generated work is just the starting point and that the final product has substantial human input. Exactly how much human input is required before a work is considered copyrightable is still up in the air.

Beyond that, should you disclose when AI has been used in creating an article, book, or piece of art? (Full disclosure: I used ChatGPT in the outlining and editing process for this article.) Do you need to disclose any use of AI at all, or only significant use? And how prominent does that disclosure need to be? You’ll need to rely on your own ethical compass to answer these questions.

6. Not Every Employer Allows It

Not every employer is okay with employees using AI tools; some ban them outright, and others allow them only under certain circumstances. Using generative AI while working for those employers could result in disciplinary action or even losing your job.

Beyond that, though, if you become too reliant on AI to do your job effectively, it may limit what employers you can work for in the future. While some companies embrace AI as a way to get work done more efficiently or to a higher standard of quality, others most certainly do not. Be sure that you keep your skills sharp and up to date without the use of AI if you want to remain competitive in the job market.

7. You Might Be Training Your Replacement

Every time we use AI, we may be making it better. Many AI providers can use your interactions to train future versions of their models (depending on their terms and your settings), so the systems become more efficient and more accurate over time, at least in theory. In other words, each time we use AI to do our work better, we may be teaching it to do our work.

A number of industries and jobs are particularly vulnerable to being replaced at least in part by AI. These include many jobs in the tech industry, such as software development, UI and UX design, and even product design. Other jobs are also at risk: data entry, finance and banking roles, customer service, transportation and logistics (especially once autonomous vehicles become a widespread reality), and even certain aspects of healthcare.

Granted, most of these industries will still have some human employees, though those employees may be tasked more with quality assurance of the AI systems’ output. Learning how to use AI effectively can help increase your job security.

Conclusion: Embracing a Future Augmented by AI

As we navigate the complex terrain of AI usage in the workplace, it becomes clear that this technology is not a panacea for all challenges, nor is it an ominous harbinger of a dystopian future work environment. Instead, AI presents a nuanced landscape, ripe with opportunities for enhancement and growth, as well as areas requiring careful consideration and ethical vigilance.

The future of work in an AI-augmented world is not about the replacement of human ingenuity but rather its enhancement. AI’s role as a collaborative tool, improving human creativity and efficiency, underscores the importance of a synergistic relationship between technology and human skill. The potential of AI to transform job roles and industry sectors is vast, yet it is equally matched by the necessity to adapt, retrain, and evolve our workforce to harness this potential responsibly.